The new accessibility features coming later this year will include Eye Tracking, Music Haptics, Vocal Shortcuts, and Vehicle Motion Cues.

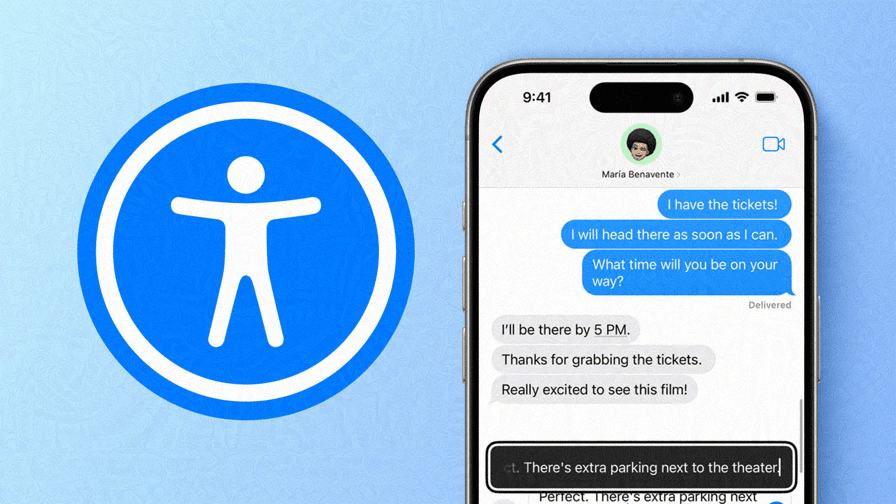

Mac users will be able to customize VoiceOver keyboard shortcuts, while Vision Pro will get systemwide Live Captions, Smart Invert, and Dim Flashing Lights.

Eye Tracking, powered by artificial intelligence, will let iPad and iPhone users navigate with just their eyes. It uses the front-facing camera to set up and calibrate in seconds, and because it relies on on-device machine learning, all data used to set up and control the feature is kept securely on the device. Eye Tracking works across iPadOS and iOS apps and requires no additional hardware. Users can move through the elements of an app and use Dwell Control to access additional functions with their eyes.

Music Haptics is a new way for users who are deaf or hard of hearing to experience music on iPhone. With the accessibility feature turned on, the Taptic Engine in the iPhone plays taps, textures, and refined vibrations in time with the music. Music Haptics works with songs in the Apple Music catalog and will be available as an API so developers can make music more accessible in their apps.

Vocal Shortcuts will allow users to assign "custom utterances" that can be used to launch shortcuts and complete complex tasks.

Vehicle Motion Cues is designed to reduce motion sickness while looking at an iPhone or iPad screen in a moving vehicle. Animated dots on the edges of the screen represent changes in vehicle motion to help reduce sensory conflict without interfering with the main content. The feature can be turned on or off in Control Center, or set to show automatically on the iPhone. Read our coverage of this feature in its entirety.

Voice Control, Color Filters, and Sound Recognition will be added to CarPlay.

In visionOS 2, systemwide Live Captions will allow users who are deaf or hard of hearing to follow along with spoken dialogue in live conversations and in audio from apps.

Hover Typing will show larger text when typing in a text field.

VoiceOver will gain new features for users who are blind or have low vision. Magnifier will have a new Reader Mode and the option to launch Detection Mode with the Action button. Braille users will get the option to choose different input and output tables. Users with low vision will benefit from Hover Typing, which shows larger text when typing in a text field, in the user's preferred font and color.

Users who have difficulty pronouncing or reading full sentences will be able to create a Personal Voice using shortened phrases. Virtual Trackpad for AssistiveTouch will let people with physical disabilities use a small region of the screen as a trackpad. Other updates coming later this year include Voice Control support for custom vocabularies and complex words.